When Is Serverless More Expensive Than Containers?

I talk to a lot of people about serverless. Shocking, I know.

When discussing the viability of serverless for production applications, I’m often hit with the same two arguments:

“Cold starts are so bad we can’t use serverless” and “aren’t you worried about the cost at scale?”

We’ve already covered why we should stop talking about cold starts. But the question about cost at scale is one we haven’t covered yet.

It’s also not a black and white argument.

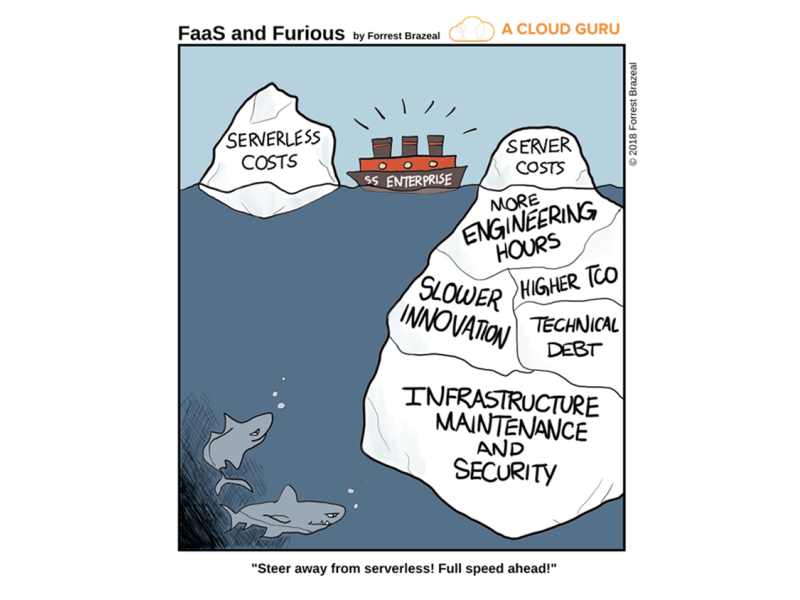

Serverless applications offer significant total cost of ownership (TCO) advantages compared to containers. You don’t have to spend time (and therefore money) on server maintenance, installing patches, rebooting services in an invalid state, managing load balancers, etc.

But for the sake of the argument, we can compare the total bill and put the other contributing factors aside. Those can be hard to objectively prove when you haven’t sold your audience on the concept yet.

So our objective is to compare strictly dollars and cents, which is again hard to prove. Comparing provisioned services like EC2 with a pay-for-what-you-use service like Lambda is difficult, but not impossible.

I often hear that serverless is expensive “at scale”, but nobody can effectively communicate what that amount of traffic actually is.

I had a great discussion with Jeremy Daly and Yan Cui on the subject a couple of weeks ago. We all arrived at the conclusion that certain workloads are significantly more expensive to run serverless, but the tipping point is usually difficult to pin down objectively. The best thing we can do is analyze our spend with a heuristic-based approach.

Let’s dive into some numbers.

Comparing EC2 and Lambda

DISCLAIMER - I know there is a significant amount of variance in actual implementations, and no calculations I perform will match everyone’s use cases. This is meant to be a generalized example to show a relative amount of scale where a serverless implementation results in a higher AWS bill.

The two applications we will compare are a load balanced EC2 fleet optimized for compute and a serverless app backed exclusively by Lambda functions.

We will ignore egress data charges and CloudWatch charges since both of those will be present in both apps. The architecture we are comparing is as follows:

Architecture diagrams of the compute we are comparing

To simulate a production application, the fleet of EC2 instances will be deployed in multiple availability zones and routed via an application load balancer. These instances will be general purpose and optimized for microservice usage, so I opted for M6g instances.

M6g.xlarge instances are priced at $0.154 per hour on-demand. In our scenario, let’s imagine we average 2 instances running in each of our two AZs 24/7. The application load balancer is $0.028125 per hour, plus LCUs. Assuming 50 connections per second that last for 0.2 seconds and process 10KB of data each, our cost to run is approximately:

($0.154 EC2 per hour x 24 hours x 30 days x 4 instances) + (($0.028125 ALB per hour + $0.01 x 3.3 LCU) x 24 hours x 30 days) = $487.53

So $487.53 a month for a load balanced fleet of 4 general purpose instances. Remember, this is for compute only. We haven’t factored in things like EBS volumes, data transfer, or caching.
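If you want to rerun that arithmetic with your own numbers, here is a minimal Python sketch. The function and parameter names are made up for illustration, and the prices are the ones quoted above, not a live price list.

```python
HOURS_PER_MONTH = 24 * 30  # the post assumes a 30-day month


def ec2_fleet_monthly_cost(instance_hourly, instance_count,
                           alb_hourly, lcu_hourly, lcu_count):
    """Monthly cost of the instances plus one application load balancer."""
    instances = instance_hourly * instance_count * HOURS_PER_MONTH
    load_balancer = (alb_hourly + lcu_hourly * lcu_count) * HOURS_PER_MONTH
    return instances + load_balancer


# Figures from the example: 4 instances at $0.154/hour, one ALB at
# $0.028125/hour, and 3.3 LCUs at $0.01 per LCU-hour.
ec2_cost = ec2_fleet_monthly_cost(0.154, 4, 0.028125, 0.01, 3.3)
print(f"${ec2_cost:.2f}")  # $487.53
```

Swap in your own instance price, instance count, and LCU estimate to get a baseline for your fleet.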

We assumed when pricing the ALB that there would be 50 connections per second that lasted for .2 seconds each, so we’ll take that number into consideration when calculating the Lambda costs.

Lambda is priced per GB-second of execution time and per invocation. For our calculations, we will assume our functions are configured to use 1024MB of memory, which gives the $.0000000133 per millisecond rate used below.

50 requests per second (RPS) add up to 129.6M requests over a 30-day month, which we will use below.

($.0000000133 per ms x 200ms x 129.6M invocations) + (129.6M / 1M x $.20) = $370.65
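The same calculation as a small Python sketch, in case you want to plug in a different request rate or duration. The names here are hypothetical, and the default prices are simply the two figures from the formula above.

```python
SECONDS_PER_MONTH = 60 * 60 * 24 * 30  # 30-day month, as in the post


def lambda_monthly_cost(rps, duration_ms,
                        price_per_ms=0.0000000133,
                        price_per_million_requests=0.20):
    """Monthly Lambda bill: a duration charge plus a per-request charge."""
    requests = rps * SECONDS_PER_MONTH
    duration_charge = price_per_ms * duration_ms * requests
    request_charge = requests / 1_000_000 * price_per_million_requests
    return duration_charge + request_charge


lambda_cost = lambda_monthly_cost(rps=50, duration_ms=200)
print(f"${lambda_cost:.2f}")
```

This prints a total within a cent of the figure above. The difference is only in how the last fraction of a cent is rounded.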

So at 50 requests per second, serverless adds up to a smaller bill than our EC2 fleet. Using the same calculations, we can find the point where the bill flips and EC2 becomes the “less expensive” option if no optimizations are made.

Using this little calculator we can see that once you reach an average of 66 requests per second, serverless becomes more expensive.
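The break-even math is simple enough to sketch in a few lines of Python. To be clear, this is not the calculator mentioned above, just an illustration of the idea with made-up names: divide the provisioned bill by what a single Lambda request costs, then convert requests per month into an average request rate.

```python
SECONDS_PER_MONTH = 60 * 60 * 24 * 30  # 30-day month, as in the post


def break_even(provisioned_monthly_cost, duration_ms,
               price_per_ms=0.0000000133,
               price_per_million_requests=0.20):
    """Return (requests per month, average RPS) where Lambda matches the bill."""
    cost_per_request = (price_per_ms * duration_ms
                        + price_per_million_requests / 1_000_000)
    requests_per_month = provisioned_monthly_cost / cost_per_request
    return requests_per_month, requests_per_month / SECONDS_PER_MONTH


# $487.53 is the EC2 + ALB bill from the example above.
requests, rps = break_even(487.53, duration_ms=200)
print(f"{requests / 1e6:.1f}M requests per month, about {rps:.1f} RPS")
```

With the example figures this lands just under 66 requests per second. Shorter function durations or less memory push the break-even point higher, which is where the "if optimizations are not made" caveat comes in.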

Comparing App Runner and Lambda

AWS App Runner is a relatively new service that went generally available in May of 2021. It is a managed service that builds, deploys, load balances, and scales containerized web apps and APIs automatically.

Pricing is a little simpler and less variable with App Runner than EC2. You pay for compute and memory resources consumed by your application, similar to Lambda. You pay $.064 per vCPU-hour and $.007 per GB-hour.

To match the example app we were pricing above, we will configure our containers to run 2 vCPUs and 4GB of memory.

Each container in App Runner can handle up to 80 concurrent requests. Our example app operates at 50 requests a second lasting 0.2 seconds each, which is only about 10 requests in flight at any moment, so we can estimate costs with a single App Runner container.

(($0.064 x 2 vCPUs) + ($0.007 x 4 GB memory)) x 24 hours x 30 days x 1 container instance = $112.32

From our example above, we already know that Lambda costs $370.65 a month at this traffic level, so in this case App Runner is less expensive to operate. Using our calculator from before, we can determine that applications running 15 requests per second or fewer will be cheaper to run on serverless with this configuration.
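Here is a rough Python sketch of the App Runner side of the comparison. The helper names are hypothetical, the 80-request concurrency limit and the prices are the ones quoted above, and the model assumes the container is billed as active all month, the same simplification the formula makes.

```python
import math

HOURS_PER_MONTH = 24 * 30
SECONDS_PER_MONTH = HOURS_PER_MONTH * 3600


def containers_needed(rps, duration_ms, max_concurrency=80):
    """Average requests in flight (rate x duration) divided by the limit."""
    in_flight = rps * duration_ms / 1000
    return max(1, math.ceil(in_flight / max_concurrency))


def app_runner_monthly_cost(vcpus, memory_gb, containers,
                            vcpu_hourly=0.064, gb_hourly=0.007):
    """Monthly cost assuming every container is active around the clock."""
    hourly = vcpus * vcpu_hourly + memory_gb * gb_hourly
    return hourly * HOURS_PER_MONTH * containers


def lambda_cost_per_request(duration_ms, price_per_ms=0.0000000133,
                            price_per_million_requests=0.20):
    return price_per_ms * duration_ms + price_per_million_requests / 1_000_000


containers = containers_needed(rps=50, duration_ms=200)
app_runner = app_runner_monthly_cost(vcpus=2, memory_gb=4, containers=containers)
break_even_rps = app_runner / lambda_cost_per_request(200) / SECONDS_PER_MONTH
print(f"{containers} container, ${app_runner:.2f}/month, "
      f"break-even near {break_even_rps:.1f} RPS")
```

Traffic is rarely this flat in practice, so treat the container count as a floor and not a sizing recommendation.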

Summary

The most important thing to remember is TCO. There is much more to cost than just the number at the bottom of your monthly bill. Ongoing maintenance, slower development times, complex networking, etc… all play a part in how much an application actually costs to run.

The examples above are simple and vague. By no means are they intended to be full detailed examples of a real production application. That would be ridiculously complex and hard to follow in a blog post. The point of the post is to show you that there is a point where compute becomes more expensive when you go serverless. But sometimes that is ok!

Many applications will never see the amount of traffic required for the shift to occur. In our EC2 example, we had to surpass roughly 170 million requests per month to reach the turning point. While that is certainly an attainable number for some, it might not be realistic for many. Early-phase startups would get a significant cost reduction by starting serverless and switching to App Runner when they reached a scale that made sense.

Speaking of App Runner, we saw that it cost less than a quarter of the EC2 spend (about 23%) to support the same application. If you are going to stick with containers (and there’s nothing wrong with that, I promise), consider App Runner instead of diving into the complexities of EC2.

If you want to do calculations on your own, I encourage you to try out the calculator I built. Enter your current provisioned service spend, adjust the configuration for Lambda, then run the script. It will tell you what the turning point is when going serverless.

There’s a whole science to accurately predicting costs and comparing all the ways you get charged with AWS. This is intended to give you a rough idea of what “at scale” means when people tell you serverless is more expensive at scale. There are many other important factors to consider when doing a full cost analysis.

Serverless costs are linear with usage. The price per request does not go up the more you use it. Oftentimes, it actually gets cheaper! Running into a situation where serverless becomes too expensive feels like a good problem to have. That means your app is gaining popularity and a new set of challenges comes into play.

Happy coding!
